Coming Soon: The ‘Semi-Professional Pilot’?

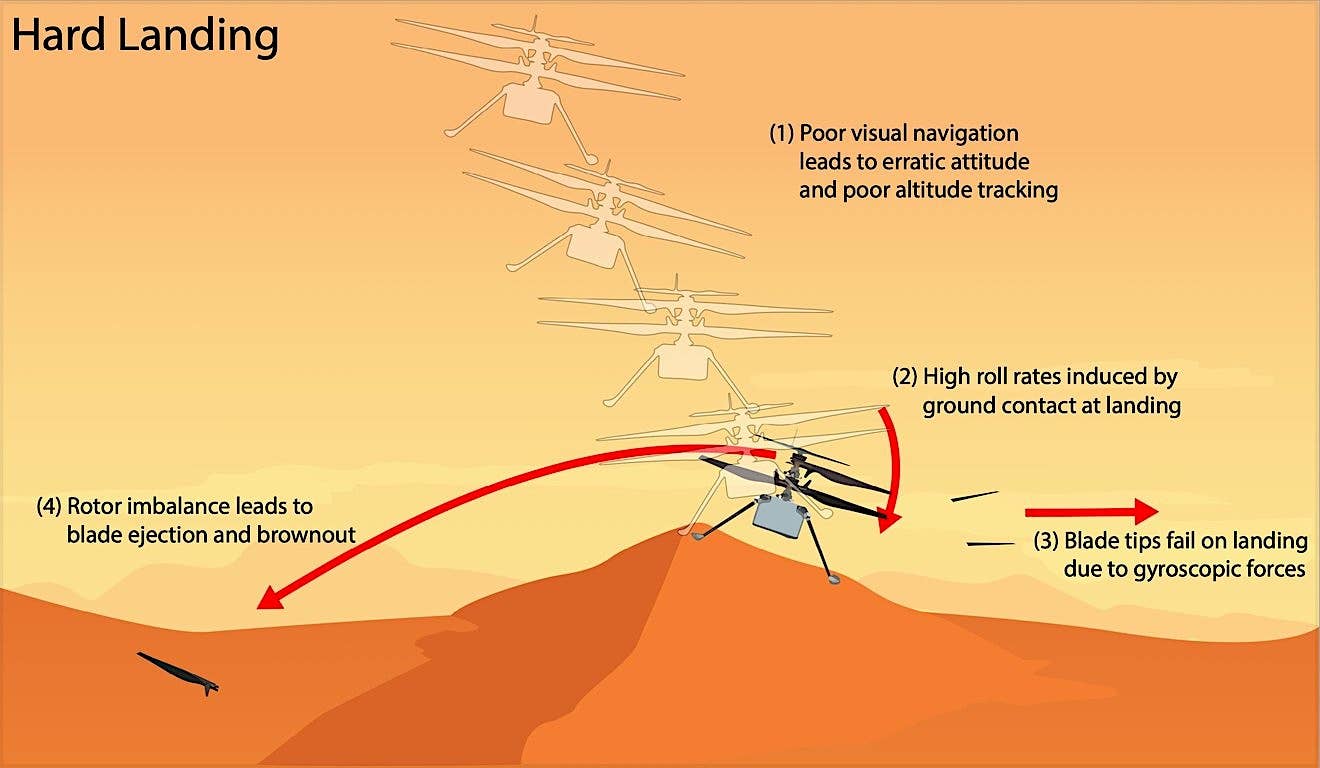

An opinion writer for Forbes has postulated that the future of the pilot trade is just that: “semi-professional” monitors of autonomous machines that actually discourage human intervention because we are so sloppy. In a column, Paul Kennard says humans are too imprecise to get the best performance and longevity out of aircraft systems, and cost too much to train for the razor-thin margins of modern low-cost air travel. He believes the burgeoning urban air mobility (UAM) industry provides the answer in terms of both practical application and societal acceptance.

“The compromise then, perhaps, is the semi-professional pilot,” Kennard wrote. “One that never follows a conventional path to a qualification by learning how to fly ‘stick and rudder’ piston trainers, but instead does a zero flight time course in an urban air mobility platform simulator complex. In much the same way that Uber and the smartphone have undermined ‘the knowledge’ required by yellow cab drivers, automation and UAM will likely do the same to the aviation workplace.”

By way of example, Kennard points to SpaceX’s Crew Dragon, which splashed down successfully Sunday in precisely the right place at the exact time while two astronauts watched their fate unfold on high-resolution screens. They had the ability to step in if critical functions didn’t happen on schedule. Even their seats reclined automatically. He said airlines and manufacturers are watching all this closely, with particular attention to the urban air mobility industry. “Once UAM is proven, licensed and demonstrably safe, airlines will start asking for some of their platforms to be similar. Future inter-city aircraft will take the UAM approach and scale it up,” he wrote.